Towards a Gradient Syntactic Model for Unsupervised Parsing

A PhD dissertation draft

You can find the .pdf version here.

Abstract

Currently, the world stores and exchanges unprecedented amounts of information in the form of text; however, although we also have unprecedented amounts of computing power, our ability to process this information is limited by our ability to computationally decode the grammar of human language. There are, in fact, algorithms that can recognize syntactic patterns in language, and learn to do so for any language, by training on text samples alone, without referencing the correct syntactic analyses. Such algorithms are called unsupervised parsers.

Unsupervised parsers do not perform well enough yet for widespread use. Most work by assuming that the unruly probabilistic patterns of language are generated by much simpler hidden syntactic structures. To infer these hidden structures, the parsers first observe words in the training text, then infer the underlying syntactic structure — usually a phrase-structure tree or a dependency tree — that most likely generated each sentence. These results are then evaluated against syntactic structures annotated by human linguists. This has proven to be a difficult task. Inference techniques have become increasingly sophisticated, but performance has yet to improve significantly.

Rarely explored is the possibility that the problem may lie in the oversimplicity of the syntactic structures themselves. The conventional approach imposes clean categories onto the messy complexity of human language, forcing words with different syntactic behaviour into uniform parts of speech, labeling phrases with different properties as constituents, and recognizing different kinds of syntactic relations as dependencies. The resulting categories and structures may be simple in form, but their diverse membership make them difficult to either identify or interpret. Indeed, disagreement over such analyses is common even among linguists, whose analyses form the “gold-standard” that parsers are evaluated against.

What we need is a model that can describe greater variation with simple, precisely-defined concepts. To this end, I propose the Gradient Syntactic Model. Instead of parts of speech, this model measures the degree of similarity between words based on the number of shared contexts. Each word has a unique neighbourhood of words whose similarity to it lies within a certain threshold. The model also quantifies the coherence of phrases, by comparing their relative frequencies to what they would be if their component words occurred independently. Besides also having neighbourhoods, phrases have two similarity metrics. Lastly, the model measures conditional probabilities between all combinations of words, phrases, and their neighbourhoods. These are used to calculate the sentence’s grammaticality score.

The Gradient Syntactic Model provides a gradient analysis much richer than discrete structures, based on well-defined concepts. It is by no means complete, and much work remains. Nevertheless, it points out a new way forward in unsupervised parsing, towards the use of gradient models that are better suited to natural language processing.

Preface

While this thesis draft was never defended, and did not help me graduate, I would still like to share it. For one thing, I spent more than three years on it. But more importantly, it develops an idea whose value I still believe in strongly: that using quantitative gradient measures, in place of conventional categories and binary relations, can increase the predictive power of linguistic models, and allow them to power the use of natural (human) language by machines.

Conventional discrete models were designed to help linguists analyze and compare language structure. They do this by simplifying the complexity of language, providing a high-level but low-resolution view. Today, computational algorithms that analyze grammatical structure, known as parsers, still mostly rely on these discrete models. But in terms of power, these models cannot compare to the language models in our brains, which allow us to actually produce and understand language on a daily basis.

Since I began this work in 2014, many exciting developments have taken place in the field of language modeling. In February 2019, the research institute OpenAI released GPT-2, a deep-learning language model that can reportedly create astoundingly coherent pieces of writing, just by starting from a short phrase or sentence and then continuously predicting the next word. While commercial applications are still elusive, the potential of this technology is great, to the point of being somewhat unsettling.

Meanwhile, this quantitative, non-discrete approach to language structure is not only useful technologically, but can also greatly improve our understanding of linguistics. A high-resolution model can reveal patterns and relationships hidden by low-resolution discrete models. It also forces us to be more precise about concepts such as grammatical relations, parts of speech, and word boundaries.

This thesis draft is just an exploratory first step in this direction. There is a lot of work to be done. I am putting my work here on Medium, in the hopes that it might find its way to others who can use it to work towards a better understanding of syntax. That, to me, would be a bigger accomplishment than getting a degree.

Table of Contents

Abstract

Preface

Abbreviations: Penn Treebank Tagset

1 Introduction

1.1 The usage-based approach

1.2 Goal and scope of thesis

1.3 Outline of dissertation

2 Current models in unsupervised parsing

2.1 Parts of speech

2.1.1 In linguistic description

2.1.2 In unsupervised POS-induction algorithms

2.1.3 In unsupervised parsing

2.2 Syntactic relationships

2.2.1 Constituents in linguistic descriptions

2.2.2 Dependencies in linguistic descriptions

2.2.3 Constituents and dependencies in unsupervised parsing

2.3 Rethinking discrete syntactic models

3 Gradient Syntactic Model

3.1 Lexical-syntactic behaviour

3.1.1 Contextual similarity

3.1.2 Evaluation

3.1.3 Discussion

3.2 Phrases

3.2.1 Phrasal coherence

3.2.2 Phrasal comparison

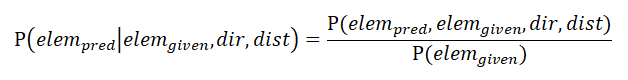

3.3 Conditional probabilities

3.3.1 Conditional probabilities

3.3.2 Grammaticality

3.3.3 Evaluation

4 Conclusions

4.1 Contributions

4.2 The road ahead

References

List of Tables

Table 2.1: Span, label, and context of every constituent in the sentence Factory payrolls fell in September (taken from Klein and Manning 2002:129)

Table 2.2: Performance of various baselines and systems, on various parts of the WSJ corpus (Klein and Manning 2004, Bod 2009, Spitkovsky et al. 2013)

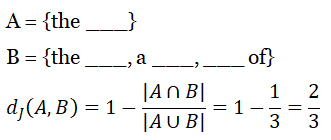

Table 3.1: Context sets for the words replace, affect, and school, from Penn Treebank-3

Table 3.2: Shared contexts among replace, affect, and school

Table 3.3: Jaccard Distances among replace, affect, and school

Table 3.4: Jaccard Distances and POS tags for the closest ten neighbours of each of the ten words chosen for evaluation

Table 3.5: Frequency information and context sizes of the ten words chosen for evaluation, in order from most to least frequent

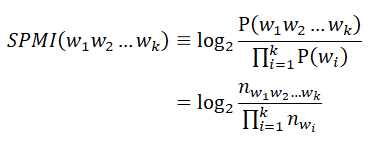

Table 3.6: Repeated sequences of lengths 2–4 with the highest SPMIs in Penn Treebank-3

Table 3.7: Phrases in the sentence, “The luxury auto maker last year sold 1,214 cars in the U.S.”

Table 3.8: 10 phrasal neighbours with the lowest Jaccard Distances to the noun phrase “the summer”

Table 3.9: 10 phrasal neighbours with the lowest Jaccard Distances to the infinitival verb phrase “to find”

Table 3.10: 10 phrasal neighbours with the lowest Jaccard Distances to the adjective phrase “more difficult”

Table 3.11: Frequency, context set size, and maximum number of possible matches for the five phrases chosen for evaluation

Table 3.12: 10 phrasal neighbours with the lowest Jaccard Distances to the adverbial phrase/adjective phrase “very much”

Table 3.13: 10 phrasal neighbours with the lowest Jaccard Distances to the prepositional phrase “in the u.s.”

Table 3.14: 10 phrasal neighbours with the lowest Jaccard Distances to the prepositional phrase “in the u.s.,” without redundant contexts

Table 3.15: 10 phrasal neighbours with the lowest sequential distances to the noun phrase “the role”

Table 3.16: 10 phrasal neighbours with the lowest sequential distances to the verb phrase “has n’t been set”

Table 3.17: 10 phrasal neighbours with the lowest sequential distances to the adjective phrase “partly responsible”

Table 3.18: 10 phrasal neighbours with the lowest sequential distances to the adverbial phrase “more closely”

Table 3.19: 10 phrasal neighbours with the lowest sequential distances to the prepositional phrase “in the u.s.”

Table 3.20: 20 sentences used in the evaluation of conditional probabilities and grammaticality scores

Table 3.21: Phrases in the sentence, “They thought it would never happen.”

Table 3.22: Conditional probability ratios between all pairs of elements or their neighbourhoods in Sentence 1

Table 3.23: Grammaticality scores of the 20 sentences in the evaluation

List of Figures

Figure 2.1: Illustration of an example of an EM algorithm (taken from Do and Batzoglou 2008)

Figure 2.2: A constituency tree for the sentence Factory payrolls fell in September (taken from Klein and Manning 2002:129)

Figure 2.3: The collection of trees and subtrees generated in U-DOP for the sentences watch the dog and the dog barks (taken from Bod 2009:762)

Figure 2.4: A dependency tree for the sentence factory payrolls fell in September (Klein and Manning 2002:129)

Figure 2.5: Steps in the derivation of the dependency tree in Figure 2.4, in DMV

Figure 2.6: Three kinds of parse structures (taken from Klein and Manning 2004:129)

Figure 3.1: Precisions at K for the ten words chosen for evaluation

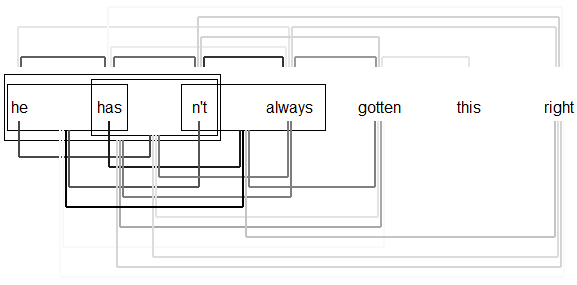

Figure 3.2: How Jaccard Distances are used to calculate sequential distances Figure 3.3: Grammaticality scores of the 20 sentences in the evaluation

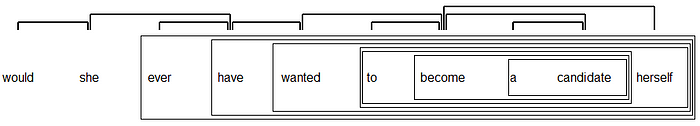

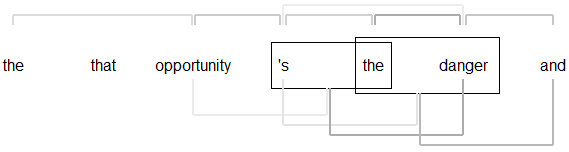

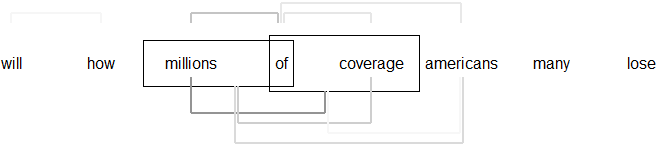

Figure 3.4: Visual representation of gradient analysis, Sentence 1

Figure 3.5: Combined representation of phrase structure and dependency analyses, Sentence 1

Figure 3.6: Gradient and discrete analyses of the 20 evaluated sentences

Abbreviations: Penn Treebank Tagset

The following is a list of the part-of-speech tags used in the Penn Treebank:

ᴄᴄ — Coordinating conjunction

ᴄᴅ — Cardinal number

ᴅᴛ — Determiner

ᴇx — Existential there

ꜰw — Foreign word

ɪɴ — Preposition or subordinating conjunction

ᴊᴊ — Adjective

ᴊᴊr — Adjective, comparative

ᴊᴊꜱ — Adjective, superlative

ʟꜱ— List item marker

ᴍᴅ — Modal

ɴɴ — Noun, singular or mass

ɴɴꜱ — Noun, plural

ɴɴᴘ — Proper noun, singular

ɴɴᴘꜱ — Proper noun, plural

ᴘᴅᴛ — Predeterminer

ᴘoꜱ — Possessive ending

ᴘʀᴘ — Personal pronoun

ᴘʀᴘ$ — Possessive pronoun

ʀʙ— Adverb

ʀʙʀ— Adverb, comparative

ʀʙꜱ— Adverb, superlative

ʀᴘ— Particle

ꜱʏᴍ — Symbol

ᴛo — to

ᴜʜ — Interjection

ᴠʙ — Verb, base form

ᴠʙᴅ — Verb, past tense

ᴠʙɢ — Verb, gerund or present participle

ᴠʙɴ — Verb, past participle

ᴠʙᴘ — Verb, non-3rd person singular present

ᴠʙz — Verb, 3rd person singular present

wᴅᴛ — Wh-determiner

wᴘ — Wh-pronoun

wᴘ$ — Possessive wh-pronoun

wʀʙ — Wh-adverb

«Il ne faut pas avoir peur d’aller trop loin, car la vérité est au-delà.»

“We must never be afraid to go too far, for truth lies just beyond.”

— Marcel Proust

1 Introduction

In the last few decades, as computer and internet usage has become increasingly widespread, a vast quantity of information in the form of digitized text has become readily available. This has led to the increasing popularity of corpus linguistics, the study of language through the analysis of language corpora. No longer do linguists have to rely solely on intuition to judge the prevalence of grammatical patterns.

There remains, however, a need for tools that can enable more sophisticated forms of corpus analysis. In particular, there is currently still no reliable way of automatically identifying syntactic patterns in unannotated corpora. For example, to determine how many uses of a certain verb are transitive and how many are intransitive, linguists must still rely on manual annotation. For many languages without access to linguistic resources, this is not a readily-available option.

One tool that can satisfy this need is parsers, algorithms that analyze the syntactic structure of sentences. Among parsers, those that can train on unannotated corpora are known as unsupervised parsers. Unsupervised parsers can be deployed in any language for which raw text is available in large quantities.

They carry enormous potential both for our understanding of language and for our ability to process it. For linguistics, they can facilitate the development of syntactic theories by providing a way to test hypotheses. This feedback, which is currently lacking, is essential for improving our understanding of linguistics.

On the technological side, the applications of unsupervised parsers are numerous. With the increase in the quantity of digitized text comes the need for tools to help process it. Current natural language processing (NLP) tools, such as search engines and machine translators, do make use of statistical properties of words and phrases; however, so far they have not been able to take advantage of syntactic structure. A syntactic model can significantly boost the effectiveness of these existing tools. Machine translation results can become more coherent; search engines can recognize queries as coherent phrases or sentences, instead of merely a structureless string of words; information extraction algorithms can better identify relationships between entities in sentences; and so on.

Despite significant improvements since the beginning of their development in the early 1990s, unsupervised parsers are not yet widely used. One major obstacle to their progress is their reliance on discrete syntactic models. In these discrete models, words are classified into part-of-speech categories, then either grouped into nested phrases to form phrase-structure trees, or put into dependency relations to form dependency trees. All three concepts — parts of speech, phrases, and dependencies — are discrete: a word either belongs to a part-of-speech, or it does not; either a sequence of words forms a phrase, or it does not; either a word is in a dependency relationship with another word, or it is not.

This discrete approach may be adequate in descriptive linguistics, where linguistic analyses are designed to be understood by human readers. However, it is ill-suited for parsers, whose output is meant to be used by other NLP applications. First, in order to fit language into neat discrete categories and relationships, discrete models discard information that is vital to an accurate analysis. Second, the analyses constructed by discrete models are based on higher-level and vaguely-defined concepts. In short, it takes detailed measurements from text, then simplifies them to create an ill-defined analyses.

Both NLP and linguistics can benefit greatly from reliable unsupervised parsers. To achieve this, we need a more precise model of syntax that can take full advantage of the large amount of linguistic data currently available. It is to this end that I propose the Gradient Syntactic Model.

1.1 The usage-based approach

A major inspiration for the Gradient Syntactic Model is the usage-based approach in linguistics, as exemplified by Bybee (2010). The usage-based approach views language as a dynamic process, rather than a static structure. Bybee (2010) illustrates in detail how this dynamic view of language can account for both diachronic changes in linguistic structures and their synchronic variation.

This model of language as a dynamic process leads naturally to the concept of gradience in linguistic structure. As Bybee (2010:1) writes, “Language is … a phenomenon that exhibits apparent structure and regularity of patterning while at the same time showing considerable variation at all levels.” While giving the illusion of discreteness, language patterns are in reality gradient and context-dependent. For example, differences in lexical-syntactic behaviour are not categorical, as parts of speech suggest; Bybee shows how auxiliaries in English diverged from finite verbs gradually, through the gradual increase in the usage frequencies of modals and the verbs be, have, and do (120–135). Meanwhile, phrases and morphologically-complex words vary in their degree of unity, depending on how their frequencies as a unit compare to the frequencies of their component morphemes (46–50). Thus, it is through gradient differences that both synchronic variations and diachronic changes occur.

The usage-based approach thus inspires the use of gradient syntactic models in unsupervised parsing. Gradient syntactic models offer at least two major improvements to conventional syntactic models. First, by recognizing gradient rather than categorical differences between words and phrases, they provide greater descriptive power. Discrete structures can be described precisely with a gradient model, but gradient structures often cannot be described by discrete models without loss of information. Second, by using gradience to account for complexity, gradient models can use quantitative concepts that are easier to define. For example, in a discrete phrasal analysis, a phrase is either a constituent or not. However, this decision is based on many different criteria which are not necessary and sufficient, and which serve more as guidelines. Thus, discrete phrasal analyses leave room for uncertainty. In contrast, in a gradient model, phrases (called chunks in Bybee 2010) are given much more room to vary; specifically, they can vary in their frequency of occurrence relative to those of their component words. The higher the relative frequency of a chunk is, the stronger it can be judged as a unit. This gradient variation is much greater than the binary choice between constituency and distituency, but in return it is much precisely-defined.

With its use of precisely-defined quantitative measurements, the usage-based approach is a natural fit for a computational syntactic model. This dissertation, then, is an exploration of this possibility.

1.2 Goal and scope

The Gradient Syntactic Model is a first attempt at a new kind of syntactic model, one that is designed for natural language processing rather than human readers. It aims to use only precisely-defined concepts, in order to predict syntactic patterns as accurately as possible. Together, these two design goals lead to a model that uses a small number of simple, rigorously-defined gradient relationships to capture a large number of syntactic patterns.

The version of the Gradient Syntactic Model described and implemented in this dissertation is meant to be a feasibility study and prototype, rather than a definitive model. There are several limitations to the current model. The first is the size of the training corpus. In order to speed up the development process, the training corpus (Penn Treebank-3) was deliberately chosen to be small (933,886 tokens). This, however, necessarily affects the model’s performance. Of course, much larger corpora are available, and once the implementation has been better optimized, it can hopefully be trained on significantly more data.

In addition, the lack of standard gradient analyses makes evaluation challenging. The Gradient Syntactic Model is designed to measure different information from conventional discrete models, and so their analyses are difficult compare. An ideal evaluation for the Gradient Syntactic Model would measure the performance boost it lends to other NLP applications; however, this lies outside our current scope. Nevertheless, in some places, we can gauge the quality of gradient analyses by examining how well they align with discrete analyses and expected results. Towards the end, we will evaluate the gradient model comprehensively by rating the grammaticality of sentences with a grammaticality score, then judging how well this grammaticality score differentiates between grammatical and ungrammatical sentences.

Thirdly, as implied in its name, the Gradient Syntactic Model only models syntactic relationships, those between words in a sentence. It therefore excludes all other areas of language — phonetics, phonology, morphology, semantics, and pragmatics — though some non-syntactic information may be captured indirectly. Since syntax interacts with all these other aspects of linguistics to some degree, the syntactic analysis that this model can provide will not be complete.

Finally, although this dissertation describes the implementation of a linear training process, the training of the Gradient Syntactic Model was originally conceived as an iterative process. The training process described herein constitutes only the first iteration of training. In subsequent iterations, the model can be improved by incorporating measurements and analyses made in the first one. Unfortunately, due to time constraints, this iterative training process will have to be implemented in the future.

Many other areas in the Gradient Syntactic Model can be further development; the most important ones will be discussed in the Conclusion. The main purpose of this dissertation, however, is to lay the foundation for the Gradient Syntactic Model. The task of revealing the model’s full potential will be left to future efforts.

1.3 Outline of dissertation

Chapter Two describes conventional discrete syntactic models, and how they have been used in unsupervised parsing. In the process, it will also examine the model’s appropriateness for unsupervised parsing. Next, Chapter Three describes the Gradient Syntactic Model by dividing it into three modules: Lexical-Syntactic Similarity, Phrases, and Conditional Probabilities. For each module, it describes the metrics and concepts that are used, presents results from its implementation, and evaluates these results. Finally, Chapter Four offers a general assessment of the entire model, and points out directions for further expansion of the model in the future.

2 Current models in unsupervised parsing

Most unsupervised parsers are based on discrete syntactic models that rely on three concepts from linguistics: parts of speech, constituents, and dependencies. Parts of speech categorize words based on their morphological, syntactic, and semantic properties. Constituents and dependencies indicate syntactic relationships between words: constituents are sequences of words (usually contiguous) that behave as a unit, while dependencies are syntactic relationships between pairs of words, one being the head, and the other its dependent.

As widely used as discrete models are in unsupervised parsing, they were originally developed for quite a different purpose. In linguistics, the purpose of these models has been to provide a theoretical framework for the description of syntax. They were developed for no purpose other than linguistic description, though sometimes they were incorporated as a part of more comprehensive theories of language, such as Generative Grammar. By the time these models were adapted to unsupervised parsing, they had already been used by linguists for some time, from almost a century in the case of constituents, to several millennia in the case of parts of speech.

For linguistic description, discrete models have their advantages: they are intuitive and easy to grasp, and they can be applied to a wide variety of syntactic patterns in languages with very different typology. However, as models in unsupervised parsers, they have two serious drawbacks: being discrete, they oversimplify the heterogeneity of the patterns they try to describe; and, being high-level, they are complex and difficult to define precisely. Together, these problems not only make discrete structures difficult for algorithms to infer, but also severely limit their usefulness. Unsupervised parsers are thus given the task of simplifying rich linguistic data in a complex yet ill-defined way.

In this chapter, we will discuss the three discrete linguistic structures currently used in unsupervised parsers: parts of speech, constituents, and dependencies. We will also see how these structures are used by unsupervised POS-induction algorithms and unsupervised parsers, using previous work to illustrate. We will compare the performances of some of this previous work, stretching back to the early days of NLP. Finally, informed by the history of the problem and its proposed solutions, we will revisit the question of the appropriateness of discrete models for the task of unsupervised grammar induction, and discuss the way forward.

2.1 Parts of speech

Parts of speech are categories of words that share morphological, syntactic, and semantic properties. Their use in linguistics stretches back millennia, and they are currently still widely used both in linguistics and in NLP, including in unsupervised parsing. However, the convenience of using discrete categories to characterize lexical properties comes at the cost of obscuring both differences between words within categories and differences between categories.

In this section, we will investigate the nature of parts of speech and their suitability for unsupervised parsing. Additionally, we will examine how unsupervised part-of-speech induction algorithms infer parts of speech. These algorithms provide a starting point for the learning of lexical properties in the Gradient Syntactic Model.

2.1.1 In linguistic description

Words in a language exhibit a variety of morphological, syntactic, and semantic properties, which are collectively referred to as lexical properties, or lexical behaviour. Though lexical properties do not generally fall neatly into categories, some of them can be used to group words into a small number of categories, which we now know as parts of speech. Through categorization (or more generally, generalization), parts of speech provide a convenient way of describing the properties of a word. This is especially useful for words that occur too rarely for enough information to be gathered about them.

As far back as the 5th century BC, the ancient Sanskrit grammarian Yāska classified Sanskrit words into four main parts of speech: nouns (nāma), verbs (ākhyāta), verbal prefixes (upasarga), and invariant particles (nipāta) (Matilal 1990). Later, in a treatise called The Art of Grammar dating from the 2nd century BC, the Greeks came up with eight parts of speech: nouns, verbs, participles, articles, pronouns, prepositions, adverbs, and conjunctions. With only a few minor modifications, these categories have remained as parts of speech used for English today. In Generative Grammar (e.g. Radford 1988), parts of speech — or “word-level categories” — came to be modeled in Universal Grammar as underlying categories, to which all words in all languages fundamentally belong.

But while it is always possible to categorize words by their lexical properties, these resulting categories are often quite difficult to define. Beck (2002) examines the advantages and disadvantages of categorizing words using morphological, syntactic, or semantic properties alone. To the untrained observer, out of all the different kinds of lexical properties, semantic properties are the most salient. For example, a noun is commonly described as a “person, place, or thing,” while a verb is typically thought of as an “action word.” But while such descriptions do fit the most typical examples of nouns and verbs (e.g. tree for nouns, and run for verbs), many words do not fit these descriptions. Many nouns do not refer to people, places, or things, but instead refer to qualities, such as warmth, or ideas, such as democracy. Similarly, many verbs describe events that are not actions, but states; examples include be, have, and endure. More importantly, a categorization scheme based on language-independent semantic properties alone would neglect language-specific morphosyntactic properties, something that parts of speech were designed to do in the first place.

It is also problematic to define parts of speech solely by morphosyntactic properties. Here we run into the opposite problem: morphosyntactic properties are language-specific, yet parts of speech such as nouns and verbs are identified in many different languages. The fact that words in different languages can be identified as nouns suggests that they share some semantic characteristics. Relying solely on morphosyntactic properties to define parts of speech neglects their striking semantic similarities.

The fact that similar parts of speech have been identified in a wide variety of languages reflects a strong tendency for language-specific lexical-morphosyntactic properties to align with language-general lexical-semantic properties. Only semantic properties are shared across languages, while morphosyntactic properties pertain to specific languages. But it is the association of morphosyntactic properties with similar semantic properties that give rise to similar parts of speech across languages. Theoretically, this generality is far from inevitable — we could easily imagine an alternate scenario where lexical-morphosyntactic properties had little correlation with lexical-semantic ones. In that case, all parts of speech would necessarily be language-specific.

Thus, Beck (2002) finds that even though parts of speech can be compared cross-linguistically, neither morphology, syntax, nor semantics is sufficient by itself to adequately define each part of speech cross-linguistically. To remedy this, he proposes definitions for nouns, verbs, and adjectives that make use of both semantic and syntactic criteria (morphological criteria were left out because morphology is not significant in all languages). A noun, for example, is defined as an element expressing a semantic name — “a conceptually-autonomous meaning referring to an individual, discrete, or abstract entity” — which can be a syntactic dependent without further measures (76–78). (Syntactic heads and dependents will be discussed later in the chapter, in the description of dependencies.)

Such definitions are sufficiently precise for linguistic analyses written for human readers. However, for unsupervised part-of-speech (POS) induction algorithms, these definitions are conceptually too high-level. Algorithms — at least initially — cannot determine if a word is “conceptually-autonomous,” having no access to this level of semantics; nor, as unsupervised algorithms, do they have information about whether a word is a syntactic head or dependent, since this requires access to dependency trees. Similarly high-level information would be required to determine if an element can be a head or a dependent without further measures.

For a computational model of lexical properties, a different approach is therefore necessary. Since high-level computational semantic models are not yet available, in the meantime, a computational lexical model should leave out semantic properties, and focus on morphosyntactic properties. Furthermore, since computational models are capable of storing vast numbers of precisely quantified relationships, they would not need to rely on discrete categories to simplify the great variety of lexical properties in a language, as humans do.

A glimpse of a possible alternative can be seen in Bybee (2010). Instead of treating parts of speech as discrete categories and attempting to establish criteria for them, Bybee (2010) adopts a more emergent view of parts of speech. To her, words are fundamentally heterogeneous, but share similarities that suggest family resemblance structures (Wittgenstein 1953). Some words can be considered more typical of a pattern, while others are more marginal, depending on the relative frequency with which they display the properties shared by other words in the same part of speech. None of these properties, however, are necessary or sufficient for membership. Parts of speech are not seen as fundamental static categories, but rather emergent categories that are constantly in flux.

As we will see, this view of parts of speech is more in line with the strategies used in unsupervised POS induction algorithms, which infer parts of speech from unannotated corpora.

2.1.2 In unsupervised POS-induction algorithms

While linguistic definitions of parts of speech are conceptually too high-level to be useful in algorithms, computational linguists have found other ways to infer parts of speech, using information that algorithms have ready access to. Most unsupervised POS-induction algorithms employ one of three general strategies. The first strategy can be described as an optimal classification search. An algorithm starts off with some initial categorization, the simplest of which is to place each word in its own word class. Then, a word is moved to another category, if doing so leads to an improvement; an improvement is usually defined as an increase in the probability of the training text. The probability of each word in the text, assuming a bigram model, can be calculated with the formula

where wᵢ is the word in position i in a sentence, and cᵢ is the word class of wᵢ (Brown et al. 1992). Two notable algorithms that employ this general strategy are Brown et al. (1992) and Clark (2003), the former being the earliest POS-induction algorithm of note in the literature. Both algorithms seek to find a categorization that maximizes the probability of the training corpus. Both algorithms assume some fixed number of word classes.

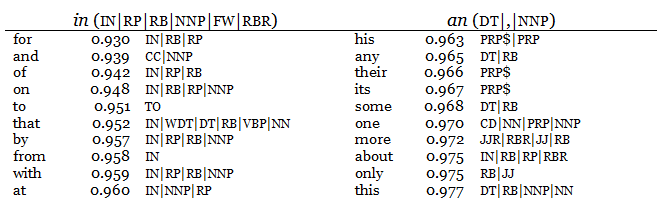

The second strategy, which I will call context vector clustering, is exemplified by Finch and Chater (1992) and Schütze (1995). In Schütze (1995), the 250 most frequent words in the training data are used as context words. Then, the algorithm records the number of times each of those context words occurs immediately on the left or right of each of the remaining words in corpus, so that each of these words has a left and right context vector. These left and right context vectors are then concatenated into 500-dimensional vectors, which is then reduced to 50-dimensional vectors using SVD (Singular Value Decomposition, a method of dimension reduction). These 50-dimensional vectors are finally clustered into 200 word classes. Similarly, to classify high- and medium-frequency words, Biemann (2006) uses the 200 most frequent words in the training corpus as context words, and characterizes each word by the number of times these context words occur immediately on the left and right of it. However, his algorithm clusters words somewhat differently, by putting each word into the class whose members have the greatest total similarity score to it. This way, the number of clusters does not need to be predetermined.

The third strategy uses Hidden Markov Models, or HMMs (see Blunsom 2004 for an introduction). One of the earliest unsupervised parser to use an HMM is Merialdo (1994), who implements a trigram HMM. The trigram HMM makes two approximations: that the probability of each part-of-speech tag depends only on the tags of the previous two words, and that the probability of each word depends only on its POS tag. The algorithm then chooses the POS tags that maximize the likelihood of the training corpus. More recent efforts tend to combine HMMs with more sophisticated machine-learning techniques, and can be described as augmented HMMs; these include Goldwater and Griffiths (2007), Johnson (2007), Graca et al. (2009), and Berg-Kirkpatrick et al. (2010).

Which strategy holds the most promise, and how well does it do? Christodoulopoulos et al. (2010) provide a careful evaluation of representative studies over the previous two decades, performing benchmark tests on seven unsupervised POS-induction algorithms: Brown et al. (1992), Clark (2003), Biemann (2006), Goldwater and Griffiths (2007), Johnson (2007), Graca et al. (2009), and Berg-Kirkpatrick et al. (2010). While each paper provides an evaluation of its own system, Christodoulopoulos et al. (2010) subjects all systems to the same tests. Moreover, in addition to testing the systems on the WSJ corpus, which most of the systems were developed on, the evaluation also tests them on translations of a multilingual corpus, Multext-East, in eight different European languages. After weighing various methods of measuring how well the induced clusters match gold-standard tags, the authors found that an entropy-based metric, V-measure, to be the most reliable (Rosenberg and Hirschberg 2007).

According to the benchmark tests, the algorithm that performs the best on corpora from eight different European languages was Clark (2003), with Brown et al. (1992) and Berg-Kirkpatrick et al. (2010) following close behind. The study also introduces a new method of evaluation. From each cluster induced by each system, words were selected heuristically as prototypes. These prototypes were then fed into another POS-induction system, Haghighi and Klein (2006), which uses these prototypes to induce new clusters. This was partly motivated by the possibility that this method of induction may yield better clusters than each of the algorithms by itself. Here, Brown et al. (1992) emerged as the winner.

Two observations can be made about these benchmark results. First, as the authors note, the older algorithms outperform many of the more recent ones. Brown et al. (1992) and Clark (2003), both employing the first strategy, optimal classification search, are the two oldest systems in the evaluation, yet are among the best performers overall, rivaled only by the newest system that was reviewed, Berg-Kirkpatrick et al. (2010). Second, even the winning systems are fairly limited in their performance. Christodoulopoulos et al. (2010) includes an evaluation of all seven systems on WSJ, which is the corpus that most of the systems were developed on, and which should therefore elicit the best results. Still, none surpasses 70% in V-measure — significantly better than the baseline of grouping each word in its own cluster (35.42%), but still a long way from the 95.98% reached by the Stanford Tagger, a supervised system.

The more recent unsupervised POS taggers, the augmented HMMs, tend to concentrate on refining statistical techniques. The increasing complexity of these techniques is reflected by the runtimes of the systems reported in Christodoulopoulos et al. (2010). While the earlier, non-HMM systems finish within an hour, the augmented HMM systems take anywhere from about 4 hours in the case of Goldwater and Griffiths (2007) to about 40 hours in the case of Berg-Kirkpatrick et al. (2010). Furthermore, the benchmark results suggest that this increase in complexity is not matched by a corresponding increase in performance.

The diminishing returns in the progress of unsupervised POS-tagging calls for some reflection. Parts of speech have been shaped partly by factors that the algorithms have no access to. These considerations include the semantic properties of words, the cognitive biases of linguists who choose which criteria are important, and historical accidents that have influenced how words have been categorized. Meanwhile, POS-induction algorithms only have access to a text corpus. Therefore, there may be a theoretical limit to how closely the results from these algorithms can align with traditional part-of-speech classifications.

It is also important to remember what parsers use parts of speech for. Parsers use them to help learn the syntactic behaviour of low-frequency items, and to focus its attention on the syntactic relationships between words, rather than on the semantic ones. The solution that best serves these purposes may not be the parts of speech that linguists have bequeathed to NLP; indeed, it may not involve categories at all. While categorization has the advantage of simplifying the diversity of lexical behaviour, parsers, unlike human linguists, benefit more from precision than from simplicity. Since every word is to some extent unique, imposing homogeneous categories onto heterogeneous words necessarily obscures their unique properties, thereby compromising precision.

Evidence of the benefits of gradient models comes from Schütze and Walsh (2008), who create a gradient model of lexical-syntactic behaviour using left and right context vectors of each word (termed half-words), similar to Schütze (1995). They first represent sentences in their training data as sequences of these half-words. Then, they use their representations of the sentences to evaluate the grammaticality of unseen test sentences, by determining whether all subsequences of a certain length in the sentence are adequately similar (defined by some threshold) to any sequences stored in training.

In their evaluation, they apply their graded model to 100 grammatical sentences (drawn from the CHILDES corpus, a corpus of conversations with children) and 100 ungrammatical sentences (randomly generated using vocabulary from CHILDES). For comparison, they also build a categorical model based on Redington et al. (1998), in which words are represented by their place in a hierarchical cluster, built based on contextual information similar to Schütze’s half-words. Schütze and Walsh (2008) find that their graded model significantly outperforms the categorical one. These results support the possibility that graded representations of lexical-syntactic behaviour may be more informative than categorical ones, and may in particular lead to better performances in parsing.

2.1.3 In unsupervised parsing

Despite having different goals from descriptive linguistics, unsupervised parsing also relies heavily on parts of speech. Parts of speech provide important information about the behaviour of low-frequency words in training. To learn how words behave syntactically, parsers need to observe repeated instances of them — the more instances the better, since every instance reveals additional information about the word’s behaviour. Parts of speech generalize the properties of high-frequency words to low-frequency words with similar properties, especially lexical-syntactic properties.

Moreover, the lexical-syntactic information provided by parts of speech allows parsers to infer syntactic relationships between words. The frequent co-occurrence of two words in the same sentence is associated not with the existence of a syntactic relationship between the words, but rather with semantic similarity.¹ However, by comparing co-occurrence frequencies of the part-of-speech tags of words rather than of the actual words themselves, unsupervised parsers can more easily infer syntactic relationships and avoid semantic ones. In fact, unsupervised parsers often only use the part-of-speech tags of words in their training corpora, to the exclusion of the actual words themselves. As Spitkovsky et al. (2011) notes, “every new state-of-the-art dependency grammar inducer since Klein and Manning (2004) relied on gold part-of-speech tags.”

One disadvantage in relying on part-of-speech tags is that they generally still require some degree of human effort to produce. Part-of-speech tags in corpora are usually annotated by humans. Alternatively, tags may be generated by supervised tagging algorithms and cleaned up with human labour afterwards. However, these algorithms themselves, being supervised, ultimately still need to train on corpora that are annotated by humans. This reliance on human labour prevents unsupervised parsers from being completely free of human assistance.

Klein and Manning (2005) address this problem by performing their own unsupervised part-of-speech induction, before training their unsupervised parser (CCM, which will be introduced later in the chapter). Using a context-vector clustering strategy similar to Finch and Chater (1992), they cluster the vocabulary in the training corpus into 200 word classes, then use labels for these classes to represent words in training. The result is a noticeably lower F1 score (63.2%) than that achieved with human-annotated POS tags (71.1%), though still above the right-branching baseline F1 score of 60.0%.²

Later, Spitkovsky et al. (2011a) show that inducing parts of speech with an unsupervised system need not lead to worse performance. As a starting point, the authors take their own improved version (Spitkovsky et al. 2011b) of a dependency parser, the Dependency Model with Valence (DMV) (Klein and Manning 2004, which will be described in more detail shortly). They then replace gold-standard POS tags in the training data with labels of word clusters induced by an unsupervised POS-induction algorithm (Clark 2000). The effect of this replacement is only a very slight dip in accuracy. As an additional experiment, they allow words to belong to more than one part of speech, with its part of speech partially-conditioned by its context. Each word has a 10% probability of having its word cluster label replaced by a tag randomly selected from the left-context tags of the following word. With another 10% probability, it receives a tag sampled from the right-context tags of the previous word. Even with this crude approximation of context-sensitivity, accuracy increases, even slightly surpassing the system’s original performance with gold-standard tags (59.1% vs. 58.4%).

¹ This is in fact the principle behind Latent Semantic Analysis: the degree of semantic similarity between two words is indicated by what words they occur with in the same document (which is often taken to be the sentence), and at what frequencies (Deerwester et al. 1990).

² F1 is the harmonic mean of precision and recall, or

2.2 Syntactic relationships

Linguists use various formalisms to represent syntactic analyses, the two most common being constituents and dependencies. Like parts of speech, constituents and dependencies are discrete concepts. More specifically, they are binary relations: two words are either in the same constituent or not, and either in a dependent relationship with each other or not. The simplicity of these two formalisms has made them popular as syntactic models for unsupervised parsers.

However, as with parts of speech, it is difficult to define exactly what constituents and dependencies are, and consequently they are not straightforward to identify. The criteria used to identify them are neither necessary nor sufficient, and sometimes lead to disagreements about the correct analysis. This implies that the phenomena represented by constituents and dependencies are not in fact discrete, but gradient.

2.2.1 Constituents in linguistic descriptions

Intuitively, a constituent is a word sequence, usually (though not always) contiguous, that behaves as a unit. Radford (1988:90), working in the framework of Transformational Grammar (part of the larger theory of Generative Grammar), proposes eight diagnostics for constituency, which tests whether or not a word sequence behaves like an independent unit: substitution, movement, sentence-fragment, adverbial interposition, coordination, shared constituent coordination, pronominal substitution, and ellipsis. All these transformations are designed to test the degree of independence and unity of the constituent candidate.

Each diagnostic produces a transformed version of the original utterance that is then judged for grammaticality; if it is grammatical, then the phrase being tested for constituency passes that diagnostic. For example, the sentence-fragment diagnostic tests whether or not a candidate can stand alone as a sentence fragment. In his example sentence drunks would get off the bus, to test whether the phrase get off the bus is a constituent, we try to use the phrase as a sentence fragment. Since get off the bus is a valid sentence by itself, the phrase passes the sentence-fragment diagnostic.

In practice, however, identifying constituents is sometimes not so straightforward, for two reasons. First, different constituency tests can often disagree with each other. For example, Bybee (2010:141) describes an example from Seppänen et al. (1994), which tests whether in spite of is a complex preposition, or whether in spite is a constituent within it. To do this, they use four diagnostic tests. Even though in spite fails one out of the four tests, Seppänen et al. (1994) determine that this is enough evidence to make it a constituent. This decision, however, rests on a rather arbitrary decision about how many failed diagnostics is tolerable.

Second, sentences can vary in grammaticality by degrees, implying that the results of each diagnostic are not binary. For example, Bybee (2010:141–143) describes the “made-up sentences” used in Seppänen et al. (1994) as sounding “literary and stilted.” She then looks for real-life examples in the Corpus of Contemporary American English (COCA), and finds that for all three diagnostics that the phrase in spite is supposed to have passed, there are actually more examples in COCA where in spite of behaves as an indivisible unit, than examples where in spite behaves independently. This shows how, even with such diagnostic tests, the results are gradient rather than binary.

All this shows that constituents are not the discrete units that they have long been considered to be. In contrast, the usage-based approach presents an alternative, one where word sequences can behave like units to different degrees. Their behaviour can be observed by comparing how often the sequence appears as a unit, to how often its component words appear individually.

2.2.2 Dependencies in linguistic descriptions

A syntactic dependency is a directed syntactic relationship between a pair of words in a sentence. One of the pair is known as the head, while the other is known as the dependent or argument. Intuitively, the head is the word that determines the syntactic behaviour of the dependent, and the greater portion of the syntactic behaviour of the pair as a whole. Dependency syntax has a centuries-long tradition among European linguists, but in the 1930s its popularity in North America waned, yielding to Generative Grammar and its use of constituents (Mel’cuk 1988:3). Mel’cuk attributes this partly to the historical accident of English, with its rigid word order, being the language spoken by North American linguists (4–5).

Although there is a consistent element of predictability in dependency relations, identifying dependencies and their heads is not always a straightforward affair. Mel’cuk (1988:129) lays out a series of criteria for this purpose, the main points of which are summarized below (see Mel’cuk 1988:129–140 for examples and details):

- Words that determine each other’s linear position are linked by a syntactic dependency.

- Words that form a prosodic unit (“roughly” equivalent to a constituent) are linked. Alternatively, a word and the head of a prosodic unit are linked, if that word and that prosodic unit together can form a larger prosodic unit.

- In a pair of linked words, the word that determines the greater portion of the syntactic distribution of the pair as a whole is the head.

- In a pair of linked words, the word that determines the greater portion of the pair’s morphological interactions with other elements is the head.

As with constituency, the notion of syntactic dependency is largely an intuitive but abstract ideal that must take shape as more concrete criteria, when applied to actual language. Mel’cuk (1988) attempts to make his criteria as widely-applicable as possible, with characteristic rigor and attention to detail; however, he admits that he is “unable to propose a rigorous definition of syntactic dependency,” calling it a notion that is “extremely important and, at the same time, not quite clear” (129). He further admits that there are syntactic phenomena for which his criteria fail to give a clear answer; for example, in the noun-noun compounds of isolating languages, neither morphology nor syntax points to a clear choice of syntactic head (138) . In such cases, linguists are left to derive new criteria, based on the abstract principle that the head determines the behaviour of the dependent, or of the pair together. Further, since in each instance a different number of criteria can be satisfied, and to varying degrees, the representation of dependencies as a binary relation appears to be an oversimplification.

Beck (2002:77) also proposes criteria for determining syntactic dependencies, as well as heads and the dependent. His criteria can be summarized into a single formulation:

Heads and dependents in a dependency relationship –

An element is the head of another element if it

- requires the presence of (or subcategorizes for),

- permits the presence of (or licenses), or

- determines the linear position of the dependent.

For example, in the sentence I hit it hard, hit, as a finite declarative verb, subcategorizes for both the subject I and the direct object it; both arguments must be present for an utterance containing hit to be complete. Thus, hit becomes the head of both I and it. Meanwhile, the adverb hard, modifying hit, is licensed by hit, since it could not have occurred without it. Finally, the linear positions of the words I, it, and hard are all determined by, and in relation to, the word hit. Thus, hard is the head of the other three words, I, it, and hard.

As in Mel’cuk (1988), the criteria in Beck (2002) show that the notion of syntactic dependency is based on a single intuitive principle, but in practice must be interpreted as a number of different criteria, some of which may conflict with each other. For example, in the noun phrase the tree, tree is usually identified as the head, with the as the dependent. According to Beck’s (2002) criteria, tree (as a singular count noun) requires the presence of the, and determines its linear position. However, it could just as easily be said that the determines the linear position of tree, and that the requires the presence of tree. The choice of tree as the head thus appears motivated by other criteria. And, as in Mel’cuk (1988), the criteria in Beck (2002) would still fail to identify the head in syntactic patterns such as noun-noun compounds with no morphological inflection.

More importantly for unsupervised parsing, the concept of syntactic dependency, as described in either Mel’cuk (1988) or Beck (2002), are too abstract for a computational model of syntax. The concepts used in their criteria — the determination of linear position, prosodic unit, syntactic distribution, morphological interaction, subcategorization, and licensing — cannot be used by a parser as a starting point for acquiring the syntax of a language, because they themselves are part of the language’s syntax. An unsupervised parser, in the beginning, has no choice but to start with observations based on low-level, rigorously-defined concepts.

2.2.3 Constituents and dependencies in unsupervised parsing

Among the unsupervised parsers that have been developed so far, both those using constituents and those using dependencies, most have used what is known as the generative model. As its name suggests, the model assumes the observed data to be generated by a hidden mechanism. The job of a learning algorithm, then, is to infer specific properties about the hidden structure. Often, it assumes that the hidden structure is the one that maximize the likelihood of the observed data.

This section will introduce the generative model, and briefly describe how it has been applied to unsupervised parsing. Then, I will describe the designs and performances of three well-known unsupervised parsers: Constituency-Context Model (CCM, Klein and Manning 2002), U-DOP (Bod 2009), and Dependency Model with Valence (DMV, Klein 2004). All three employ generative models; although U-DOP uses a different training algorithm, its underlying model is still recognizably generative. Finally, I will briefly discuss more recent extensions to the DMV in Spitkovsky et al. (2011a, 2011b, 2012, 2013), which report the best comparable performances to-date.

The Generative Model

The generative model consists of three variable parts: 1) observable data, 2) hidden variables, and 3) parameters. The observable data is assumed to be generated by some hidden mechanism that may be determined by hidden variables. The operation of this hidden mechanism is determined by the parameters.

One simple example illustrating this model involves coin tosses (Do and Batzoglou 2008). A coin is chosen randomly from two coins, A and B. Each coin has a different and unknown probability of coming up heads, e.g. PA(head) = 0.3 and PB(head) = 0.5. Consider these results from a series of ten coin tosses, which are all generated either by Coin A or Coin B:

The coin tosses that create these results is the hidden mechanism; it contains one hidden variable, the coin that is used. The parameters are Pᴀ(head) and Pʙ(head), the probabilities that each coin will come up heads. The values of Pᴀ(head) and Pʙ(head) that make the observed sequence of coin toss results the most likely to occur, then, are the maximum likelihood estimates of the two parameters.

Much of the work in the field of machine learning is devoted to tackling the problem of inferring the hidden variables and the parameters in a generative model, given the observable data. One common solution to solving this problem is to use an Expectation-Maximization (or EM) algorithm, an iterative method that searches for the values of the hidden variables and parameters that maximize the probability of the observed data. Do and Batzoglou (2008) provide a concise example of an EM algorithm, applied to the two-coin example described above. Their illustration of this example is reproduced in Figure 2.1:

The observed data consists of five experiments, each of which uses one of two coins (A and B) to generate a series of ten coin-toss results. The algorithm consists of two steps: the E-step (expectation) and the M-step (maximization). First, the parameters are initialized; Pᴀ(head) (or θᴀ) is initalized to 0.6, while Pʙ(head) (or θʙ) is set to 0.5. Then, in the E-step, the algorithm uses these parameter values to calculate the expected values for the hidden variable, the coin used. This is done by first calculating the probability of each coin being the one used for each experiment; for example, in the first experiment, the probability is 0.449 for Coin A and 0.551 for Coin B. The expected contribution of each coin in an experiment is then calculated by multiplying the probability of each coin by the results, so that Coin A’s contribution in Experiment 1, which has 5 heads and 5 tails, would be 2.246 heads and 2.246 tails. Finally, in the M-step, the parameter values that would maximize the expected values of the hidden variable are calculated as the proportion of the contribution of heads for each coin. With newly updated parameter values, the algorithm repeats, until convergence. The solution is not necessarily globally optimal, but is the local optimum given the initial parameter values. Finding the global optimum may require multiple tries with different initial values.

Generative models are widely used in machine learning in general, and in natural language processing in particular. Out of the three POS-induction strategies described in the last section, generative models in fact form the basis of two of them. Algorithms using the strategies of optimal-classification search and HMM treat the word classes to be inferred as the hidden underlying structure that generates observable words, similar to how one of the two coins in the above example generate results of heads or tails.

When the generative model is applied to unsupervised parsing, the observable data is the training text, with each word being a probabilistic event. The hidden variables are the syntactic structure, while the parameters are the probabilities that a specific word or phrase will be generated. These parameters may be conditional probabilities; for example, in a dependency model, a parameter may specify the probability of a word occurring, given that a certain word is the head, that the dependency generating it is directed towards the left (or right), and that it is immediately adjacent to the head (or not). (This is essentially the parameters used in the DMV in Klein 2004, which will be discussed shortly). Training, then, becomes a matter of finding the hidden structures and parameter values that maximize the likelihood of the observed text.

We can now examine several past parsing models as examples. The first system is the Constituency-Context Model (Klein and Manning 2002), which uses an EM algorithm to infer structure in a generative model.

Constituency-Context Model (CCM)

Being a constituency model, CCM represents the syntactic structure of sentences as binary constituency trees. It uses two groups of parameters: 1) the conditional probabilities of word sequences given a part (or span) of the sentence, and 2) the conditional probabilities of the contexts of these sequences given the span.

Figure 2.2 shows a constituency tree for an example sentence, taken from Klein and Manning (2002:129). Table 2.1, also from Klein and Manning (2002:129), lists all the constituent spans in the sentence, along with their labels, contents (which the authors call yields), and contexts. Sentence boundaries are marked by ◊. Note that Klein and Manning (2002), like most unsupervised parsers, use POS tags in place of the actual words:

The first group of parameters, P(yield|span), specifies the probabilities of various sequences, given a span in the sentence (e.g. the span of factory payrolls would be <0,2>). Spans are further characterized by whether or not they are constituents or not (“distituents”). For example, the span <0,2>, factory payrolls, is a constituent, according to the parse tree in Figure 2.2. The parameter would therefore be the conditional probability P(ɴɴ ɴɴꜱ|<0,2>=constituent). Table 2.1 lists all the spans that are constituents in the tree; all other spans are distituents. The other group of parameters, P(context|span), specifies the probabilities of the contexts of these sequences, where a context is the tag immediately preceding a sequence, plus the one immediately following a sequence. For example, the context of ɴɴ ɴɴꜱ in <0,2> would be ◊―v. The associated parameter would therefore be P(◊―v|<0,2>=constituent).

To generate sentences, the model is assumed to have first chosen a tree (which the authors call a bracketing), then generated words (actually POS tags) based on its constituent spans. Then, to calculate the probability of a sentence, the model sums up the sentence’s probability over all possible trees; these trees are restricted to non-crossing binary trees, and all receive equal probability. The training algorithm, an Expectation-Maximization (EM) algorithm, then calculates what the trees are expected to be given the parameters, as well as the parameter values that maximize the total probability of the sentences in the training corpus. In the testing phase, where the parser encounters sentences not seen in training, these optimized parameter values can then be used to find trees for these unseen sentences.

We can see that in CCM, the more frequently a certain sequence occurs in the training corpus, the more likely it will be found within a particular span, whether it is a constituent or not. This can in fact be shown mathematically. Since all binary trees have fixed, equal probabilities, and are determined before the sentence is known, the properties of a span (its start and end indices, and its constituency) are independent of what the span’s contents are — in other words, P(sequence|span). What distinguishes constituents from distituents, then, is the frequency of their subsequences. In a binary tree, a constituent must also contain within it nested constituents, contiguous subsequences that are also frequent relative to other sequences of the same length. Additionally, these high-frequency subsequences must fit into a binary tree. Distituents, on the other hand, have no such restrictions. Therefore, according to CCM, good candidates for constituency are ones that, in addition to being high-frequency themselves, also contain high-frequency nested, contiguous subsequences.

Klein and Manning (2002) evaluate CCM on a small training set consisting of 7,422 sentences from WSJ-10, the subset of sentences in the Penn Treebank Wall Street Journal corpus that are no more than 10 words long (after removing punctuation). As Table 2.2 shows, CCM performs substantially better than a hypothetical baseline where all parses are purely right-branching (where every subtree consists of one word on the left branch, and the rest of the sentence on the right). It achieves an F1 score of 71.1%, compared to 60.0% for the right-branching baseline. The theoretical upper-bound is actually 87.7%, since CCM is restricted to binary-branching, unlike the parse trees in the Penn Treebank (Klein and Manning 2005).

Klein and Manning (2002) also test CCM on a separate section of the Penn Treebank, the ATIS section (after training on WSJ-10). This evaluation better reflects the algorithm’s performance, since the test set is separate from the training data. Here, it reaches a substantially lower F1 score (51.2%), though still higher than the right-branching baseline for this task (42.9%).

Klein and Manning (2004) also evaluate CCM on a German corpus (2,175 sentences) and a Chinese corpus (2,437 sentences), each also consisting only of sentences with 10 words or less. Its performances on these languages are poorer (F1 scores of 61.6% and 45.0% for German and Chinese respectively), though still comfortably better than their respective right-branching baselines.

Unsupervised data-oriented parsing (U-DOP)

U-DOP is another constituency model. Like CCM, U-DOP generates all possible non-crossing binary trees as candidates for each sentence in the observed text. U-DOP then stores these trees in its memory, along with all the subtrees that they consist of. Unlike in CCM, lexical terminals in U-DOP are treated as part of the tree, so that trees in memory may be partly or fully lexicalized. U-DOP is a generative model, with the hidden variables being its trees, and the parameters being the probabilities of the trees in memory.

Figure 2.3, taken from Bod (2009:762), illustrates the trees and subtrees generated for two sentences, watch the dog and the dog barks. Non-terminals in the trees are marked with X, since they are unknown. Notice that the dog, which occurs in both sentences, is represented twice as an independent tree. During testing, when encountering an unseen sentence, U-DOP draws on the trees in its memory to derive possible trees for it. The probability of a derived tree is the product of the probabilities of all the subtrees that compose it. The best tree is the one with the shortest derivation (i.e. can be composed from the fewest subtrees); where there are ties, the tree with the highest probability is chosen.

At its heart, the strategy of U-DOP is similar to that of CCM, in that high-frequency sequences containing high-frequency contiguous subsequences are the best candidates for constituents in both models. As for their differences, U-DOP has the advantage of being able to learn nonadjacent contexts, since it stores entire trees instead of simply the constituency of linear sequences (Bod 2009:765). For example, U-DOP can store the tree more X than X, where X marks slots for other subtrees (Bod 2009:764). In contrast, CCM only calculates the probabilities of complete sequences, and cannot abstract away portions of it in the same way. Additionally, U-DOP represents contexts more directly compared to CCM, since it stores them within tree structures rather than as separate events (Bod 2009:765).

Bod (2009) evaluates U-DOP on the same corpora as CCM, for ease of comparison. Table 2.2 shows that it comfortably outperforms CCM in English (82.7% F1 vs. 71.9%), as well as the combination of DMV and CCM (77.6%). Its results for German (66.5%) and Chinese (47.8%) also exceed those of CCM. To test how much of U-DOP’s performance in English compared to the other languages is due to the larger training data, Bod (2009:767) trains it on a training corpus of a size more similar to the German and Chinese corpora, with 2,200 randomly-selected sentences. This results in an F1 score of 68.2% ― a significant drop, showing that the amount of training data has a significant effect on performance. Additionally, Bod (2009:767–8) measures the benefit of storing discontinuous subtrees (e.g. more X than X), and finds that F1 scores without them are significantly lower (72.1% for English, 60.3% for German, and 43.5% for Chinese).

Dependency Model with Valence (DMV)

DMV was the first dependency parser to score a higher accuracy rate than a simple right-branching baseline, where the root is the first word of the sentence, and every word takes the word to its right as its sole argument (Spitkovsky et al. 2010). Since then, many unsupervised dependency parsers have been based on the DMV.

DMV is a generative dependency model: the hidden variables are the dependency structures, while the parameters are probabilities that specify how likely various dependencies will be generated. There are two types of parameters: 1) Pᴄʜᴏᴏꜱᴇ(a|h,dir), the probability of choosing a certain argument a, given the head h, and a’s direction relative to h (left or right); and 2) Pꜱᴛᴏᴘ(STOP|h,dir,adj), the probability of stopping the generation of arguments in a certain direction, given the head h, the direction of the stop relative to h, and whether or not the next argument in direction dir will be adjacent to h (in other words, whether or not an argument has already been generated in direction dir). It is the variable adj that expresses the valency information of each head, and it is the representation of this valency information that gives the parsing model its name.

Figure 2.4, taken from Klein and Manning (2002:129), shows a dependency tree for factory payrolls fell in September, the same sentence in the constituency tree in Figure 2.2:

DMV first starts with a special root node, which generates the root to its left by convention. Then, the rest of the tree is generated recursively, depth-first, with right arguments generated before left ones. Before a head generates a dependent, the event is also accompanied by a non-stop event, marking the model’s decision to continue generating dependents in that direction. The probability of this non-stop event is given by the parameter Pꜱᴛᴏᴘ(¬STOP|h,dir,adj). Then, when the head finishes generating dependents in that direction, it is marked by a stop event. Following this derivational strategy to derive the dependency tree in Figure 2.4, we get the following series of events:

From a given root, a structure is generated by identifying the right arguments of that root (if any), then stopping, then its left arguments, then stopping, then repeating this process recursively with each argument, until the whole sentence is covered. These derivational steps are modeled as a series of independent events, whose probabilities can be multiplied to calculate the likelihood of the dependency tree, which also contains the sentence it generates. Like CCM, the training algorithm in DMV uses EM to estimate parameter values; but, unlike CCM, it maximizes the likelihood of the dependency tree together with the observed text.

What ultimately increases the probability that one word will be analyzed as the argument of another word (the head), then, is a high Pᴄʜᴏᴏꜱᴇ(a|h,dir). This means that to increase its chances of being chosen as the dependent of a head, the dependent should occur frequently on same side of the head, wherever the head is found.

Klein and Manning (2004) evaluate the DMV on the same corpora as the CCM. The DMV achieves an accuracy rate of 43.2% if direction is counted, compared to the right-headed baseline accuracy of 33.6%, or 62.7% compared to 56.7% if direction is not counted (Table 2.2). In German and Chinese, too, its accuracy rates surpass adjacent heuristic baselines (40.0% directed and 57.8% undirected for German, 42.5% and 54.2% for Chinese). In terms of F1 scores, the DMV also surpasses those of adjacent heuristic baselines for German and Chinese. However, in English it scores a significantly lower F1 score than the better adjacent-heuristic baseline (52.1% for DMV vs. 61.7% for right-branching).

Klein and Manning (2004) then experiment with combining CCM and DMV. This is possible because a dependency tree, defined formally as a planar, directed, acyclic graph, is in fact isomorphic to a binary-branching constituency tree with contiguous constituents. Klein and Manning (2004:129) illustrate this isomorphy for the same sentence in Figure 2.6a–c:

The trees in Figure 2.6b and c are identical, except whereas the non-terminals in Figure 2.6c are labels of phrasal categories, those in Figure 2.6b are the POS tags of the heads of the subtrees beneath them. For instance, since the phrase factory payrolls is headed by payrolls (NNS) (Figure 2.6a), the nonterminal for the phrase is ɴɴꜱ in the constituency tree (Figure 2.6b). Similarly, in is the nonterminal of in September in the constituency tree because it is the head of the prepositional phrase, and ᴠʙᴅ is the nonterminal of fell in September as well as the entire sentence, because it is the head in both cases.

In Klein and Manning (2004), CCM and DMV are combined using an EM algorithm. At each iteration of the algorithm, to estimate the probability of a new combined tree, the probabilities of the events from both the constituency tree and the dependency tree for the same sentence are multiplied together. The combined model outscores both CCM and DMV, both in terms of directed dependency accuracy and F1 (Table 2.2).

Recent developments

Since DMV was proposed in Klein and Manning (2004), the Stanford NLP group has made significant improvements to it. Spitkovsky et al. (2010) experiment with using training data of increasing complexity (“Baby Steps”), with training data consisting of shorter sentences of at most 15 words (“Less is More”), and with training data consisting of shorter sentences abruptly followed by sentences of unrestricted length (“Leapfrog”). Spitkovsky, Alshawi, & Jurafsky (2011) incorporate constraints involving punctuation to constrain training and inference. The same study also experiments with “lexicalization,” where words occurring 100 times or more in training are presented both by their POS tags as well as their surface forms. Then, as discussed in the last section, Spitkovsky, Alshawi, Chang, and Jurafsky (2011) replace POS tags with word clusters induced by the unsupervised POS-induction algorithm of Clark (2000). The best performance among these studies was achieved by a combination of this model and the punctuation-constrained model, with the additional step of allowing each word to be probabilistically assigned different tags based on context.

Later studies achieve even further improvements. Spitkovsky et al. (2012) describe a model called dependency-and-boundary, where variables carrying information about the boundaries of sentences are included as given variables in parameters. Most recently, in Spitkovsky et al. (2013), the authors experiment with an approach that calls to mind genetic algorithms, restarting the EM training algorithm with new, informed estimates of initial parameter values on the one hand, and on the other hand merging candidate solutions to produce better ones. This latest study reports a directed dependency accuracy rate of 72.0% on WSJ10, almost 30% higher than the 43.2% reported for the basic DMV model in Klein and Manning (2004).

Table 2.2 lists all the comparable evaluation results for all the systems mentioned in this section, as well as the performances of various baselines, a supervised system, and the theoretical upper-bound. It reflects on the steady progress that has been made over the last 15 years in unsupervised parsing, since Klein and Manning (2002):

2.3 Rethinking discrete syntactic models

Based on available evaluations, the overall progress of unsupervised algorithms on the task of syntax-induction is mixed. Benchmarks evaluating unsupervised POS-induction algorithms show that the early systems of Brown et al. (1992) and Clark (2003) outperform more recent systems. On the other hand, in unsupervised parsing, significant progress appears to have been made over the last 15 years.

However, it is important to see this progress in the context of the task in which the progress is being made. At the beginning of the chapter, two drawbacks of the discrete approach were identified: the inability of discrete models to capture the more subtle variations in syntactic patterns, and the difficulty of using high-level linguistic concepts in a computational model. Having examined the origins of these discrete linguistic concepts and their applications in unsupervised algorithms, we can now step back and see these problems more clearly.